PAVE Note

Objective

The PAVE project pursues a far-reaching approach: it proposes an operating system that provides a power-fail-aware, byte-addressable virtual non-volatile memory based on a combination of DRAM and NVRAM. The operating system manages this virtual non-volatile memory transparently for the machine programs (i.e., application processes) and tries, as far as possible, to exploit the persistence properties introduced by NVRAM. Integrating NVRAM at the VM level is meant to pave the way for the use of NVRAM in application and system programs, for legacy software as well as for newly developed programs. In addition, the operating system is to support capacity scaling flexibly, in order to increase the performance and improve the energy efficiency of the computing system.

Memory-hierarchy integration

PAVE assumes an “ideal computing system” that uses DRAM, if at all, only to hide the higher access latency that NVRAM still exhibits. Just as DRAM already serves as a cache in an ordinary VM subsystem backed by block-oriented non-volatile storage, it now becomes a cache for byte-addressable non-volatile main memory. Unlike approaches that require changes to application software before NVRAM can be used, PAVE by default provides a transparent solution that automatically makes NVRAM accessible in the virtual address space of a process, either implicitly as high-capacity volatile memory (anonymous memory) or as named persistent data accessible via the standard file-system interface. In addition, an “expert interface” lets programs break this transparency if desired and bring NVRAM, or parts of it, under their own administration and control. The crucial point is that, despite NVRAM, processes within this virtual (non-volatile) address space are neither confronted with inconsistencies in persistent data caused by operations that were interrupted before completion or repeated after completion, nor do they have to be designed to be fail-safe: backing up the process state upon the power-failure interrupt makes the power failure functionally transparent!

The targeted VM subsystem transparently migrates pages between the two types of memory according to access statistics during normal operation and ensures that modified pages are never propagated back into the storage hierarchy before a consistent, recoverable state has been reached in NVRAM. In serious exceptional cases, such as a power failure, all dirty pages of logically persistent data that currently reside in fast DRAM are flushed back into NVRAM. Static and dynamic analyses of the length of the remaining energy window, on the one hand, and of the time needed to back up the entire relevant volatile system state, on the other hand, define parameters that guarantee the backup can complete. Based on these parameters, the VM subsystem enforces an upper bound on the number of modified NVRAM pages held in the DRAM cache at any time, which guarantees fail-safety.

Last but not least, such an NVRAM page cache should also facilitate and accelerate the management of the persistent metadata typical of an operating system. This primarily concerns metadata for restarting the system and VM metadata for non-volatile memory regions, but also the metadata of the file system that usually ensures data persistence in the computer system. In contrast to a solution cast in hardware or to a combined suspend-to-RAM/disk model, PAVE is anchored in the operating system, allows large amounts of NVRAM to be used just like DRAM, and pursues a needs-based approach. This makes it more flexible and transferable to common computer systems of almost any size, provided they have a stock paging MMU.
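To illustrate how such parameters could translate into the upper bound on modified pages, the following C sketch computes the largest number of dirty DRAM-cached NVRAM pages whose write-back still fits into the residual energy window, checking both time and energy. The parameter names and the linear cost model (a fixed part for CPU registers and hardware caches plus a per-page part) are assumptions made for illustration, not PAVE's actual parameter set.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical platform parameters (not PAVE's actual parameter set). */
struct backup_params {
    double window_s;          /* residual energy window: time the PSU keeps the system alive */
    double residual_energy_j; /* energy still available after the power-fail signal */
    double fixed_time_s;      /* time for the fixed part: CPU registers, hardware caches */
    double fixed_energy_j;    /* energy consumed by that fixed part */
    double nvram_bw_bps;      /* sustained NVRAM write bandwidth, bytes per second */
    double energy_per_page_j; /* energy to write back one page to NVRAM */
    size_t page_size;         /* bytes per page */
};

/* Upper bound on dirty NVRAM pages cached in DRAM:
 *   floor(min((window - fixed_time) * bw / page_size,
 *             (residual_energy - fixed_energy) / energy_per_page))
 * Returns 0 if the fixed part alone exhausts the time or energy budget. */
size_t max_dirty_pages(const struct backup_params *p)
{
    assert(p->page_size > 0 && p->nvram_bw_bps > 0.0 && p->energy_per_page_j > 0.0);

    double time_left = p->window_s - p->fixed_time_s;
    double energy_left = p->residual_energy_j - p->fixed_energy_j;
    if (time_left <= 0.0 || energy_left <= 0.0)
        return 0;

    double by_time = time_left * p->nvram_bw_bps / (double)p->page_size;
    double by_energy = energy_left / p->energy_per_page_j;
    double bound = by_time < by_energy ? by_time : by_energy;
    return (size_t)bound; /* conversion truncates, i.e. floors a non-negative value */
}
```

With a 10 ms window, 2 ms of fixed cost, 1 GB/s of NVRAM write bandwidth and 4 KiB pages, for instance, the time constraint alone allows at most 1953 dirty pages; a tighter energy budget lowers the bound further.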

Transparency for legacy software

PAVE will provide an operating-system platform that allows billions of lines of legacy code to benefit from NVRAM immediately and transparently as high-capacity main memory, while I/O-intensive applications implicitly gain access to large amounts of readily available file data cached in NVRAM. Modified file data held in the persistent VM page cache doubles as an implicit redo log at no extra cost once all operations on a file have completed successfully. In this case, the changes can be propagated from NVRAM to the underlying storage hierarchy whenever the system sees fit, for example in times of low system activity or when available NVRAM is running low. Otherwise, all changes can simply be dropped, and the persistent data automatically revert to the last consistent state. Thus, ordinary open()/close() operations acquire transaction-like semantics, as long as the underlying file system does not crash and corrupt the file’s data.
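The following C sketch models this behavior for a single file. It is a deliberately simplified, hypothetical model (tiny pages, whole-page writes, invented names such as `pf_write` and `pf_recover`): pages written before close() are pending, a successful close() commits them so that the page cache acts as a redo log, and recovery after a crash drops whatever is still pending. One design choice is an assumption of this sketch only: a page that still holds a committed but not yet propagated version is written back before it is modified again, so an abort can always fall back to that version.

```c
#include <assert.h>
#include <string.h>

#define NPAGES 4  /* hypothetical file size in pages */
#define PAGE   16 /* hypothetical (tiny) page size in bytes */

enum pstate { CLEAN, PENDING, COMMITTED };

struct pfile {
    char disk[NPAGES][PAGE];  /* underlying storage: last propagated state */
    char cache[NPAGES][PAGE]; /* persistent page cache in NVRAM */
    enum pstate state[NPAGES];
};

/* Propagate one cached page to the storage hierarchy. */
static void writeback_page(struct pfile *f, int pg)
{
    memcpy(f->disk[pg], f->cache[pg], PAGE);
    f->state[pg] = CLEAN;
}

/* Current contents as seen by the application. */
const char *pf_read(const struct pfile *f, int pg)
{
    return f->state[pg] == CLEAN ? f->disk[pg] : f->cache[pg];
}

/* Whole-page write; changes stay in the NVRAM cache until propagated. */
void pf_write(struct pfile *f, int pg, const char *data)
{
    assert(pg >= 0 && pg < NPAGES);
    if (f->state[pg] == COMMITTED) /* keep the committed version recoverable */
        writeback_page(f, pg);
    strncpy(f->cache[pg], data, PAGE - 1);
    f->cache[pg][PAGE - 1] = '\0';
    f->state[pg] = PENDING;
}

/* Successful close(): pending pages become the redo log of a committed update. */
void pf_close(struct pfile *f)
{
    for (int i = 0; i < NPAGES; i++)
        if (f->state[i] == PENDING)
            f->state[i] = COMMITTED;
}

/* Lazy propagation "whenever the system sees fit", e.g. when NVRAM runs low. */
void pf_writeback_all(struct pfile *f)
{
    for (int i = 0; i < NPAGES; i++)
        if (f->state[i] == COMMITTED)
            writeback_page(f, i);
}

/* Recovery after a crash: uncommitted changes are dropped, committed ones kept. */
void pf_recover(struct pfile *f)
{
    for (int i = 0; i < NPAGES; i++)
        if (f->state[i] == PENDING)
            f->state[i] = CLEAN;
}
```

A crash after close() leaves the committed data in the cache for later propagation, while a crash before close() simply discards the pending pages, so readers see the last consistent state.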

We will integrate the above-mentioned mechanisms and strategies into the VM subsystem of FreeBSD rather than implement a completely new system from scratch. We chose FreeBSD because it provides an efficient and mature VM subsystem with a well-documented design rooted in the Mach VM subsystem. The Mach design clearly separates the hardware-dependent MMU tables from the logical structure of address spaces, which makes it considerably easier to render the VM subsystem NVRAM-aware through minimally invasive changes. In particular, we intend to reuse most of the existing, highly tuned mechanisms readily available in FreeBSD, such as the gathering of access statistics and the page-replacement strategies, without disturbing its complex “VM machinery”. The VM metadata structures related to the page cache (such as the data describing the resident set of pages) then need to be kept persistent as well and must be manipulated in a highly efficient, transactional manner.

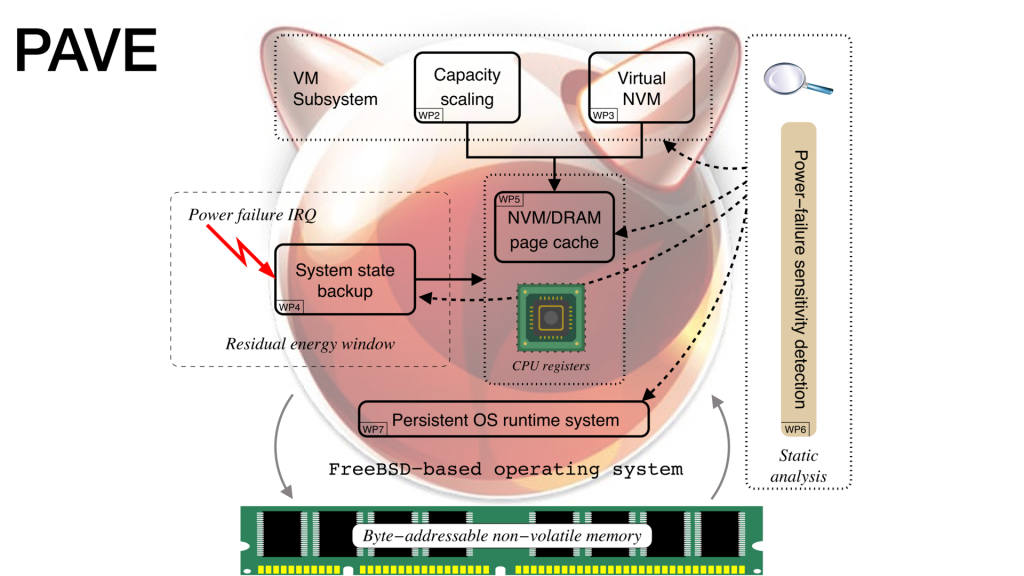

Overview chart

As just mentioned, the PAVE approach outlined here uses FreeBSD as the base operating system.

The adjacent figure roughly outlines the functional blocks of PAVE.

First, there is capacity scaling of main memory, which sustains the growth in performance and enables new applications.

Right next to it is NVRAM virtualization, that is, the provision of a system abstraction that hides the pitfalls of NVRAM from machine programs.

Both modules use a DRAM-based cache for the working set of pages of the processes, which virtually run directly in NVRAM. The computing system is assumed to need DRAM only to hide the higher latency or access times that NVRAM still exhibits.

The physically volatile but logically non-volatile contents of this page cache, together with the volatile contents of the CPU registers and hardware caches, form the system state that is backed up to NVRAM in the event of a power failure (residual-energy-dependent NVRAM-based operation shutdown, REDOS). It must be guaranteed that backing up this state takes no longer than the time for which the residual energy of the power supply unit keeps the computing system alive. For this purpose, the energy cost of the backup procedure and the NVRAM write bandwidth are determined, and the electrical characteristics of the computer’s power supply unit are measured. Furthermore, the FreeBSD-based system software undergoes a power-failure sensitivity analysis that identifies critical instruction sequences whose semantic integrity is endangered by a sudden power failure during a write access to NVRAM. These examinations rely on in-house tools for compiler-based operating-system tailoring and on static program analysis to predict time and energy costs.

Memory-hierarchy integration calls for a two-level hierarchy of software-managed caches that uses NVRAM as a buffer for data in conventional storage and DRAM as a buffer for NVRAM pages. Buffering pages in DRAM is subject to strict timing guarantees so that these logically persistent data can reliably be consolidated back into NVRAM in exceptional cases. That is, to survive power failures, the maximum size of this DRAM page cache must be matched to the length of the remaining energy window of the power supply.
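A minimal sketch of how the DRAM level could enforce such a bound: whenever a page is about to become dirty and the bound is already reached, the oldest dirty frame is first written back to NVRAM, so the number of dirty frames never exceeds `max_dirty`. The FIFO victim choice, the fixed frame count and all names are assumptions for illustration; a real implementation would rather build on FreeBSD's existing replacement strategies.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

#define MAX_FRAMES 64 /* hypothetical number of DRAM frames caching NVRAM pages */

struct dram_cache {
    size_t max_dirty;           /* bound derived from the residual energy window */
    size_t ndirty;              /* frames currently dirty; invariant: ndirty <= max_dirty */
    size_t head;                /* ring-buffer index of the oldest dirty frame */
    int dirty_fifo[MAX_FRAMES]; /* dirty frames in the order they became dirty */
    bool is_dirty[MAX_FRAMES];
    size_t writebacks;          /* number of forced write-backs to NVRAM */
};

void dc_init(struct dram_cache *c, size_t max_dirty)
{
    assert(max_dirty >= 1 && max_dirty <= MAX_FRAMES);
    memset(c, 0, sizeof *c);
    c->max_dirty = max_dirty;
}

/* Placeholder: would copy the frame back to its NVRAM page and persist it. */
static void write_back_to_nvram(struct dram_cache *c, int frame)
{
    (void)frame;
    c->writebacks++;
}

/* Called before the first store to a clean cached frame (e.g. on a write fault). */
void dc_mark_dirty(struct dram_cache *c, int frame)
{
    assert(frame >= 0 && frame < MAX_FRAMES);
    if (c->is_dirty[frame])
        return;
    if (c->ndirty == c->max_dirty) { /* bound reached: clean the oldest dirty frame */
        int victim = c->dirty_fifo[c->head];
        c->head = (c->head + 1) % MAX_FRAMES;
        c->ndirty--;
        c->is_dirty[victim] = false;
        write_back_to_nvram(c, victim);
    }
    c->dirty_fifo[(c->head + c->ndirty) % MAX_FRAMES] = frame;
    c->is_dirty[frame] = true;
    c->ndirty++;
}
```

Because write-backs happen during normal operation, the amount of work left for the power-failure path is capped by `max_dirty` at every instant.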

Last but not least, a persistent runtime system suitable for use within operating-system kernels offers support functions that enable the PAVE modules of FreeBSD to execute directly in NVRAM. The focus is on abstractions that help critical or sensitive sections run non-blocking or even wait-free.

Project results and findings

The first comprehensive PAVE measure was the “NVRAM-ification” of FreeBSD, that is, the provision of a FreeBSD that, together with the machine programs it runs, operates exclusively from NVRAM. The concept was presented at Dagstuhl Seminar 22341 before the work began; the practical implementation and an evaluation of “NVM-only” FreeBSD are documented in a Technical Report and a contribution to ARCS 2023. However, the FreeBSD variant described in these papers does not yet support REDOS.

For the customization of FreeBSD, we investigated the dynamic updating and specialization of programs in general and of operating systems in particular. The underlying question was whether the undoubtedly application-dependent strategies for capacity scaling and NVRAM page caching, as typical candidates for dynamic reconfiguration at runtime, require dynamic adaptation to the respective calling environment, since no prior knowledge of it is available when FreeBSD is built. Work on such update and specialization techniques was published at USENIX ATC 2023 and PLOS 2023.

However convenient dynamic customization of FreeBSD may be under the conditions defined by a particular application profile, it turned out not to be strictly necessary for the already demanding work on PAVE. Instead, this “nice-to-have” work is now being pursued in the context of DOSS.

Ongoing work

Suspend/Resume with NVRAM

Based on the FreeBSD kernel running entirely in NVRAM, generally applicable suspend-to-NVRAM and resume-from-NVRAM functions are under development; they provide the minimal subset of REDOS system functions. Upon a suspend request, the mechanism writes all volatile CPU state into NVRAM and shuts down the system. When powered on, the system retrieves the saved state from NVRAM and restores the volatile hardware state. Thus, execution of kernel and userland can resume as if no interruption of service had occurred.
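The ordering that makes such a suspend record trustworthy can be sketched in C: the volatile state is persisted first, and only then is a marker written that declares the record complete, so resume never restores a half-written state. The structure layout, the marker value and `persist_barrier()` are hypothetical; on real hardware the barrier would also have to write back the affected cache lines to NVRAM, which the C11 fence used here does not do by itself.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SAVE_MAGIC 0x5245444FU /* hypothetical "record complete" marker */

/* Hypothetical subset of the volatile CPU state. */
struct cpu_state {
    uint64_t pc, sp, regs[8];
};

/* Save area assumed to reside at a fixed NVRAM location. */
struct nvram_save_area {
    struct cpu_state cpu;
    uint32_t magic; /* written last: marks the record as complete */
};

/* Placeholder: real code must write back the cache lines covering [addr, addr+len)
 * to NVRAM before fencing; the C11 fence below only orders the stores. */
static void persist_barrier(const void *addr, size_t len)
{
    (void)addr;
    (void)len;
    atomic_thread_fence(memory_order_seq_cst);
}

void suspend_to_nvram(struct nvram_save_area *sa, const struct cpu_state *cpu)
{
    assert(sa != NULL && cpu != NULL);
    sa->magic = 0; /* invalidate a stale record before overwriting it */
    persist_barrier(&sa->magic, sizeof sa->magic);
    memcpy(&sa->cpu, cpu, sizeof *cpu);
    persist_barrier(&sa->cpu, sizeof sa->cpu);
    sa->magic = SAVE_MAGIC; /* commit point */
    persist_barrier(&sa->magic, sizeof sa->magic);
    /* The real mechanism would shut the system down here. */
}

/* Returns false if no complete record exists (cold boot). */
bool resume_from_nvram(struct nvram_save_area *sa, struct cpu_state *cpu)
{
    assert(sa != NULL && cpu != NULL);
    if (sa->magic != SAVE_MAGIC)
        return false;
    memcpy(cpu, &sa->cpu, sizeof *cpu);
    sa->magic = 0; /* consume the record so it is not resumed twice */
    persist_barrier(&sa->magic, sizeof sa->magic);
    return true;
}
```

If power is lost in the middle of `suspend_to_nvram`, the marker is still zero and the next boot treats the record as absent instead of resuming from an inconsistent state.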

Analysis of IRQ-induced latency

Interrupts are a mechanism by which devices signal the occurrence of events. One such event is an imminent power failure caused by a power outage. To estimate the response time for handling such an event, the possible delay until the interrupt handling routine is executed must be estimated. This work, which is required for REDOS, indicates how much time remains to save the processor state within the residual power window available at a power failure. Delays can be caused by other interrupts being processed or by critical sections, which are characterized by the CPU masking all incoming interrupt requests. These sections can be identified in the source code and may even be assigned an upper bound on the cost they incur before a power-failure interrupt can be serviced.
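Under simplifying assumptions (a single CPU, a power-failure interrupt with the highest priority, and interrupt handlers that run with interrupts masked), a pending power-failure interrupt waits for at most one masked section to end. The following C sketch computes that bound and the cycles left for saving processor state; the section list, the per-section cycle bounds and the entry cost are hypothetical inputs that would come from the source-code analysis described above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record: an interrupt-masked region and its worst-case cost. */
struct masked_section {
    const char *name;
    uint64_t wcet_cycles;
};

/* Worst-case delay before the power-fail handler starts. Assumes a single CPU and a
 * power-fail interrupt of highest priority: it waits for at most one masked section
 * (critical section or handler running with interrupts masked) plus entry cost. */
uint64_t worst_case_irq_latency(const struct masked_section *s, size_t n,
                                uint64_t entry_cycles)
{
    assert(s != NULL || n == 0);
    uint64_t longest = 0;
    for (size_t i = 0; i < n; i++)
        if (s[i].wcet_cycles > longest)
            longest = s[i].wcet_cycles;
    return longest + entry_cycles;
}

/* Cycles left within the residual window for saving processor state;
 * a negative result means the window cannot be met. */
int64_t save_budget_cycles(uint64_t window_cycles, uint64_t latency_cycles)
{
    return (int64_t)window_cycles - (int64_t)latency_cycles;
}
```

Only the longest masked section matters under these assumptions, which is why bounding the cost of each such section in the source code directly bounds the interrupt response time.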

Related projects

The sister project NEON investigates stripping operating systems of persistence measures that become unnecessary if the operating system in question runs entirely from NVRAM. The subject of the investigation is the runtime overhead, in terms of time, energy and space, of such measures, which are typical for volatile main memory (e.g., DRAM). Purifying an NVM-only operating system in this way is expected to yield lower background noise (less time and energy consumption) and a smaller trusted computing base (fewer lines of code). This work uses Linux as the base operating system.

The ResPECT project, which focuses on embedded communication systems, is developing a holistic concept for the operating system and communication protocol, based on the assumption that the transfer of information (receiving control data for actuators or sending sensor data) is the core task of almost all networked nodes.

What all three projects have in common is the approach of a residual-energy-dependent NVRAM-based operation shutdown (REDOS).

Project staff

Principal investigators: Jörg Nolte, Wolfgang Schröder-Preikschat

Postgraduates: Oliver Giersch, Dustin Nguyen

Student assistants: Karl Bartholomäus, Ole Wiedemann